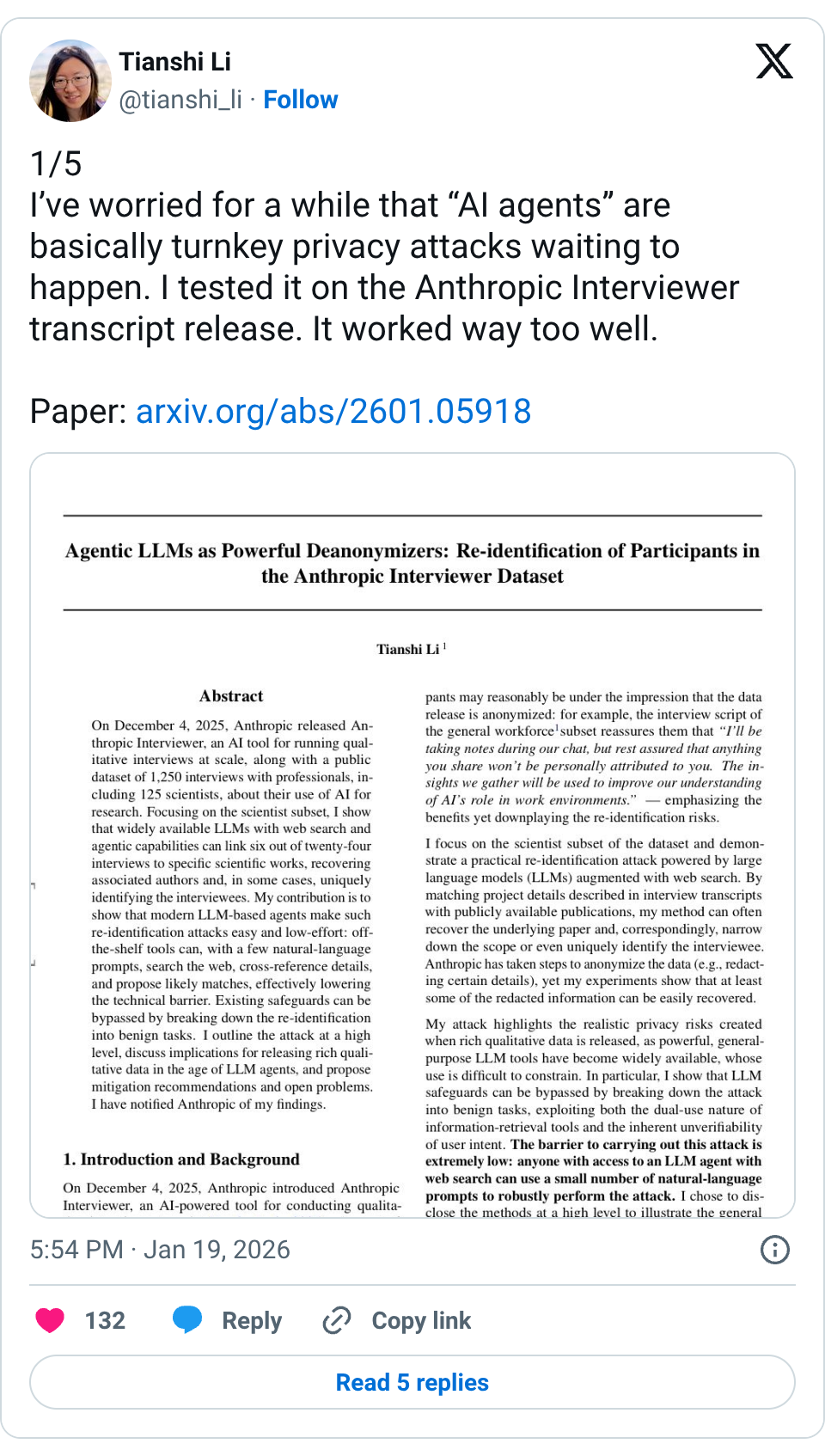

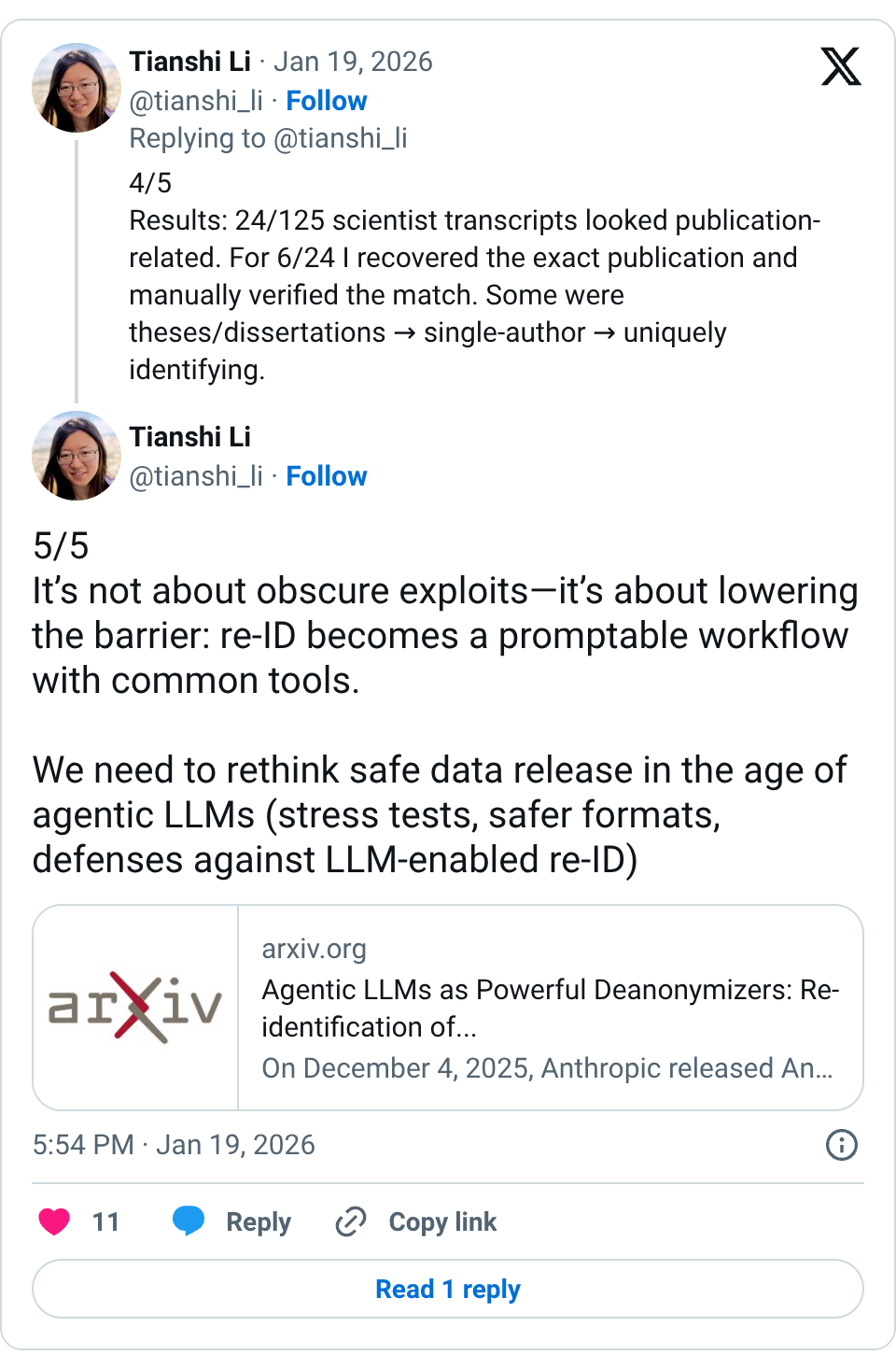

🧵 1/5 I’ve worried for a while that “AI agents” are basically turnkey privacy attacks waiting to happen. I tested it on the Anthropic Interviewer transcript release. It worked way too well. Paper: https://arxiv.org/abs/2601.05918

🧵 2/5 Anthropic released 1,250 AI-led interviews (125 w/ scientists) with identifiers redacted. My goal was to measure residual re-identification risk.

🧵 3/5 Method (high level): (1) spot interviews likely describing a specific published work; (2) use a web-augmented LLM to search + rank candidate publications with confidence estimates.

🧵 4/5 Results: 24/125 scientist transcripts looked publication-related. For 6/24 I recovered the exact publication and manually verified the match. Some were theses/dissertations → single-author → uniquely identifying.

🧵 5/5 It’s not about obscure exploits. It’s about lowering the barrier: re-ID becomes a promptable workflow with common tools. We need to rethink safe data release in the age of agentic LLMs (stress tests, safer formats, defenses against LLM-enabled re-ID).
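For readers who want the counts in 4/5 as rates, here is a minimal sketch of the arithmetic. It uses only the three numbers stated in the thread; the constant and function names are illustrative, not taken from the paper.

```python
# Counts quoted in the thread (4/5); names are hypothetical, chosen for this sketch.
TOTAL_SCIENTIST_TRANSCRIPTS = 125   # scientist interviews in the release
PUBLICATION_RELATED = 24            # transcripts that looked publication-related
EXACT_MATCHES = 6                   # exact publication recovered and manually verified


def pct(numerator: int, denominator: int) -> float:
    """Return numerator/denominator as a percentage rounded to one decimal."""
    return round(100 * numerator / denominator, 1)


flagged_rate = pct(PUBLICATION_RELATED, TOTAL_SCIENTIST_TRANSCRIPTS)
match_rate_among_flagged = pct(EXACT_MATCHES, PUBLICATION_RELATED)
match_rate_overall = pct(EXACT_MATCHES, TOTAL_SCIENTIST_TRANSCRIPTS)

print(f"Flagged as publication-related: {flagged_rate:.1f}%")          # 19.2%
print(f"Exact match among flagged:      {match_rate_among_flagged:.1f}%")  # 25.0%
print(f"Exact match among all 125:      {match_rate_overall:.1f}%")    # 4.8%
```

So roughly one in five scientist transcripts was flagged, a quarter of those were matched to an exact publication, and that works out to just under 5% of the scientist subset.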
