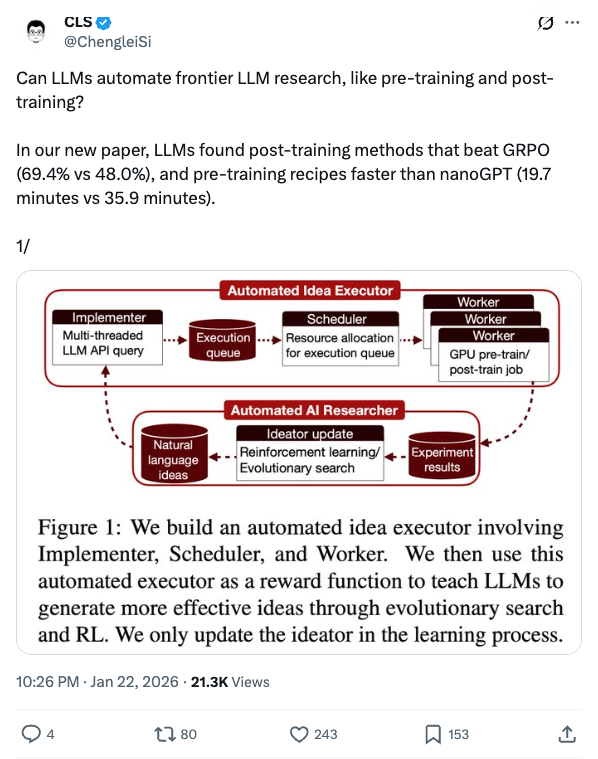

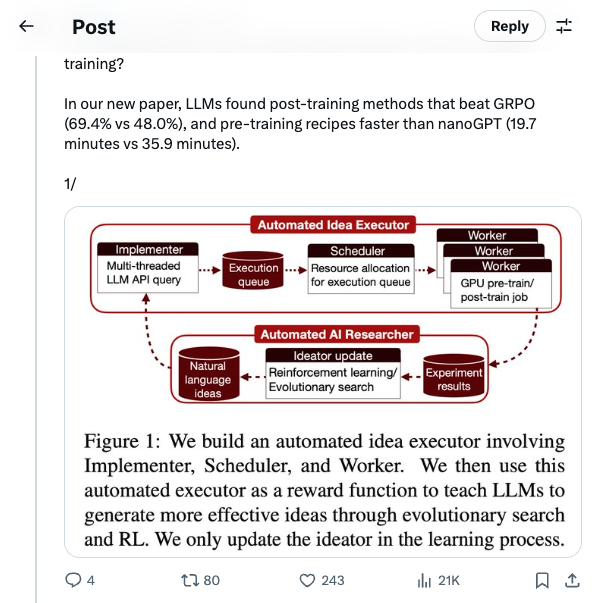

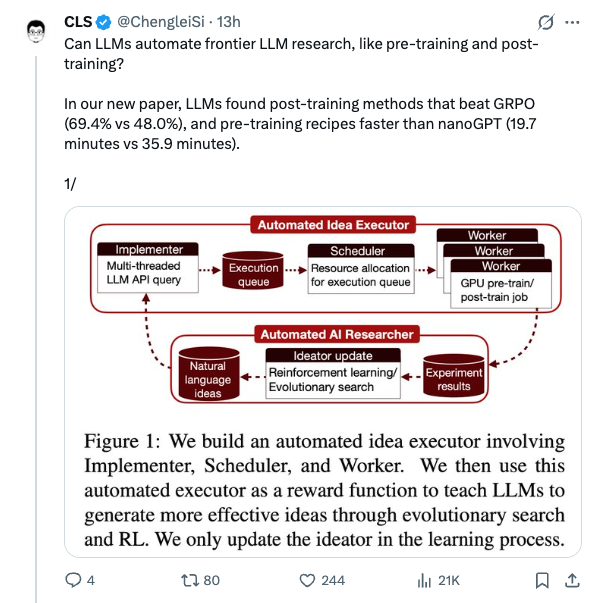

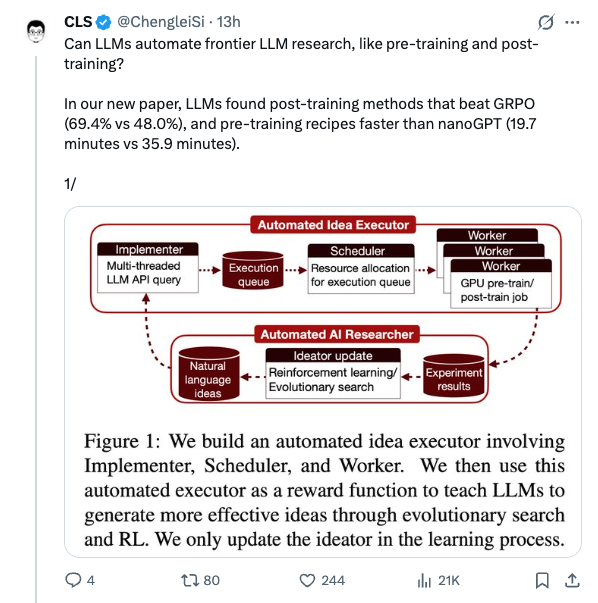

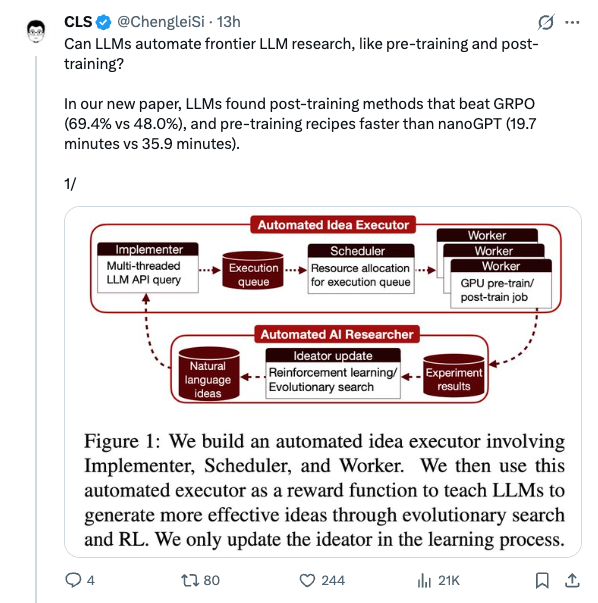

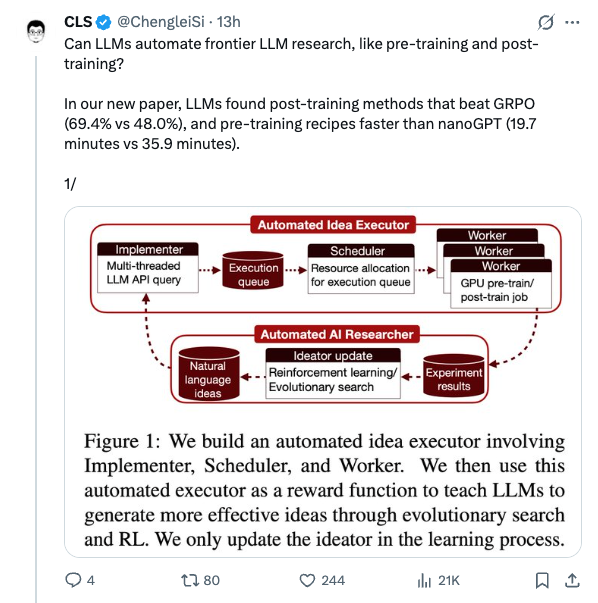

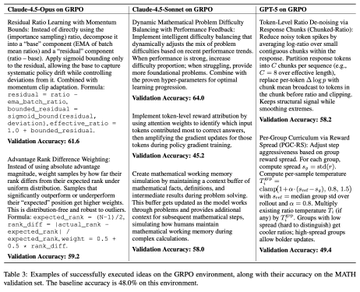

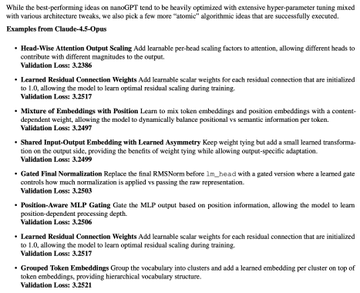

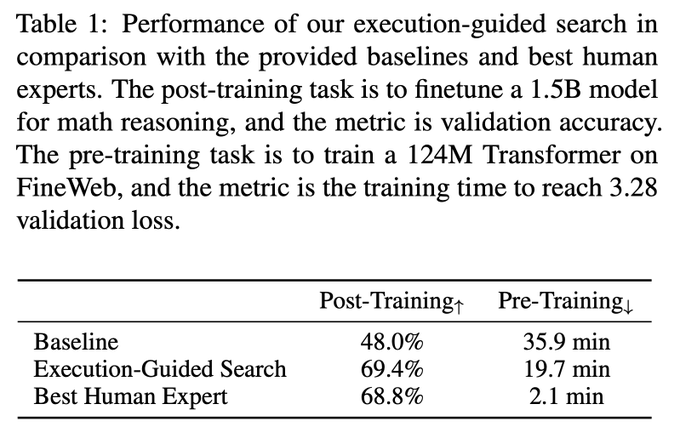

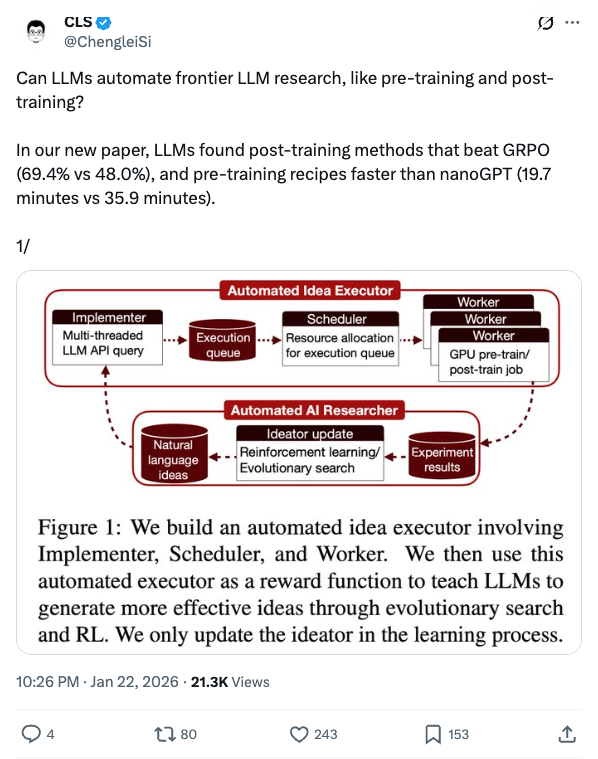

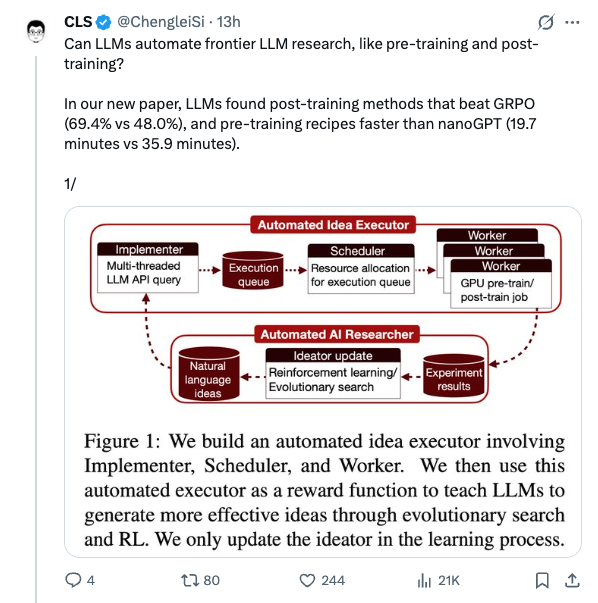

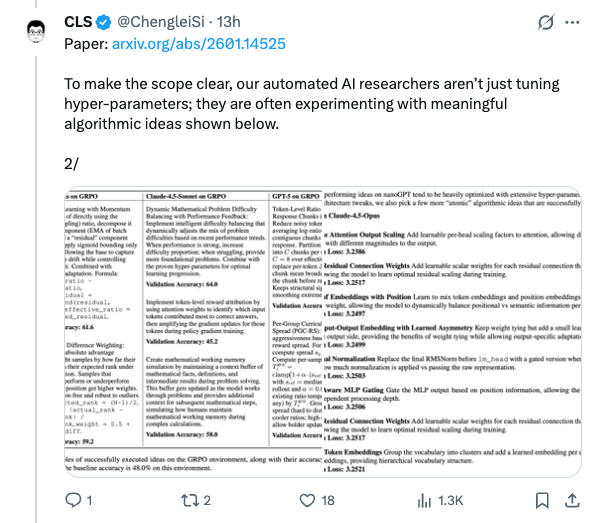

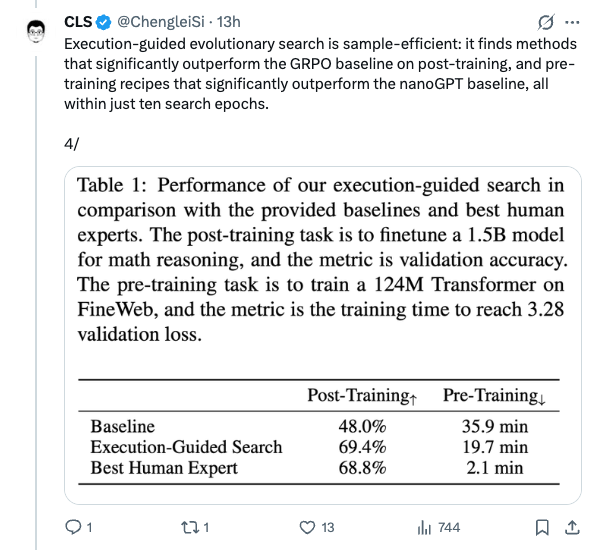

🧵 Can LLMs automate frontier LLM research, like pre-training and post-training? In our new paper, LLMs found post-training methods that beat GRPO (69.4% vs 48.0%), and pre-training recipes faster than nanoGPT (19.7 minutes vs 35.9 minutes). 1/

🧵 Paper: https://arxiv.org/abs/2601.14525
To make the scope clear, our automated AI researchers aren’t just tuning hyper-parameters; they are often experimenting with meaningful algorithmic ideas shown below. 2/

🧵 How did we achieve this? We first build an automated executor to implement ideas and launch large-scale parallel GPU experiments to verify their effectiveness. Then we use the execution reward for evolutionary search and RL. 3/

🧵 Execution-guided evolutionary search is sample-efficient: it finds methods that significantly outperform the GRPO baseline on post-training, and pre-training recipes that significantly outperform the nanoGPT baseline, all within just ten search epochs. 4/

🧵 It’s much more effective than just sampling more ideas and doing best-of-N. But models tend to saturate early, and only Claude-4.5-Opus exhibits clear scaling trends. 5/

🧵 RL from execution reward can successfully improve the average reward, but does not improve the max reward :( The reason is: models are converging on a few easy-to-implement ideas and are avoiding ideas that are difficult to execute (which will get them 0 reward). 6/

🧵 Read the full paper for many more experiment details, analyses, examples, and our thoughts on future directions. Big thanks to my wonderful co-lead @ZitongYang0, and our advisors @YejinChoinka @EmmanuelCandes @Diyi_Yang @tatsu_hashimoto for making this project happen. 7/

🧵 Shout out to @tydsh @edwardfhughes @lschmidt3 @cong_ml @jennyzhangzt @jiaxinwen22 @ChrisRytting @zhouandy_ @xinranz3 @StevenyzZhang @ShichengGLiu @henryzhao4321 @jyangballin @WilliamBarrHeld @haotian_yeee @LukeBailey181 and many others for the helpful discussion. 8/

🧵 Last but not least, we thank @LaudeInstitute, @thinkymachines, and DST Global for their generous sponsorship of compute resources. 9/9
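
For readers who want a concrete picture of the loop described in 3/ to 5/, here is a minimal toy sketch of execution-guided evolutionary search next to a best-of-N baseline. Everything in it is an assumption made for illustration: `execute`, `propose`, the one-dimensional "idea", and the reward landscape are hypothetical stand-ins, not the paper's executor, idea generator, or search procedure.

```python
import random

def execute(idea):
    """Hypothetical stand-in for the automated executor: returns a reward in [0, 1].

    Ideas outside [0, 1] "fail to run" and get reward 0, mirroring the thread's
    note that hard-to-execute ideas receive 0 reward.
    """
    if not 0.0 <= idea <= 1.0:
        return 0.0
    return 1.0 - abs(idea - 0.8)  # toy landscape with its peak at idea = 0.8

def propose(rng, parents, n, noise=0.05):
    """Hypothetical idea generator: mutate a parent if any exist, else sample blindly."""
    if not parents:
        return [rng.uniform(-1.0, 2.0) for _ in range(n)]
    return [rng.choice(parents) + rng.gauss(0.0, noise) for _ in range(n)]

def evolutionary_search(rng, epochs=10, per_epoch=8, keep=2):
    """Each epoch: propose ideas, execute them, and reuse the top scorers as parents."""
    pool = []  # (reward, idea) pairs for everything executed so far
    for _ in range(epochs):
        parents = [idea for _, idea in sorted(pool, reverse=True)[:keep]]
        ideas = propose(rng, parents, per_epoch)
        pool.extend((execute(idea), idea) for idea in ideas)
    return max(pool)

def best_of_n(rng, n):
    """Baseline: sample n independent ideas and keep the best one."""
    return max((execute(idea), idea) for idea in propose(rng, [], n))

if __name__ == "__main__":
    rng = random.Random(0)
    print("evolutionary:", evolutionary_search(rng))
    print("best-of-80:  ", best_of_n(rng, 80))
```

Both strategies spend the same budget of 80 executions here; the only difference is that the evolutionary loop feeds execution rewards back into the next round of proposals. The toy says nothing about the real gap between the two, which the paper measures on actual training runs.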

Thread Screenshots

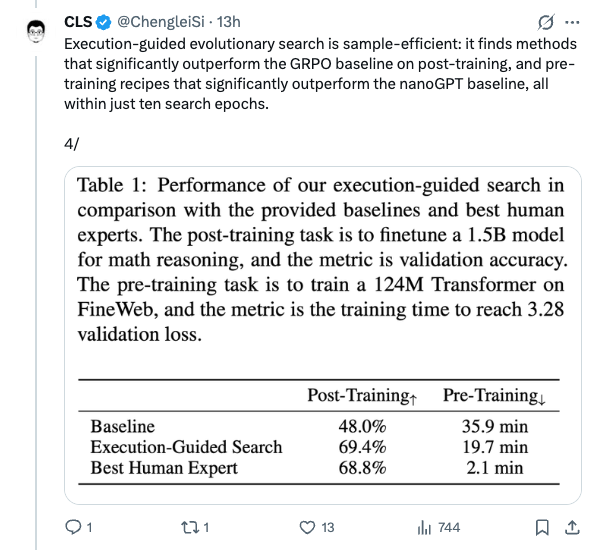

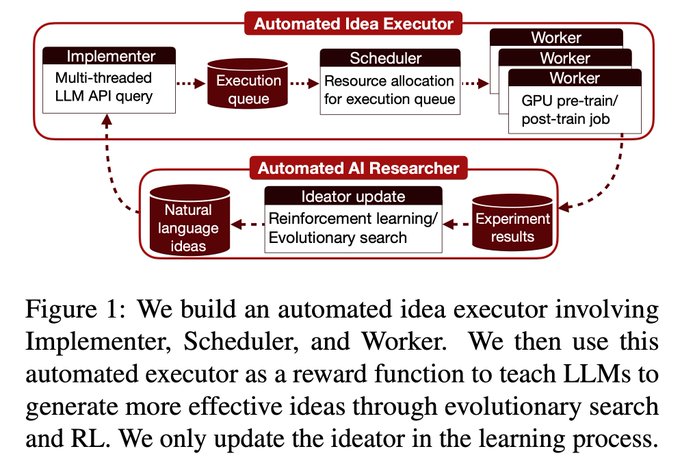

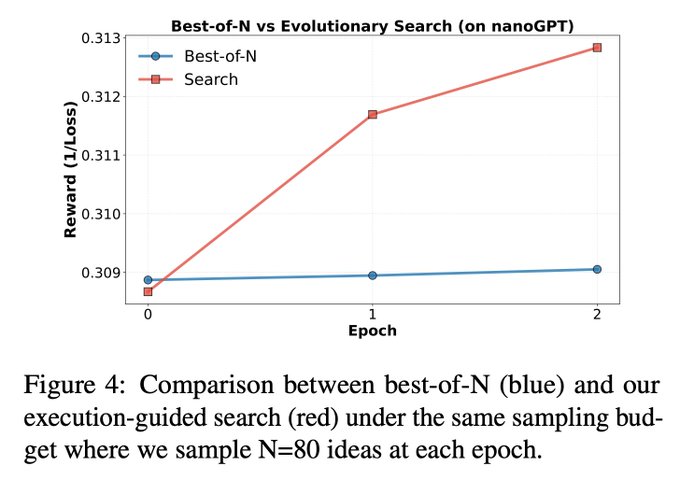

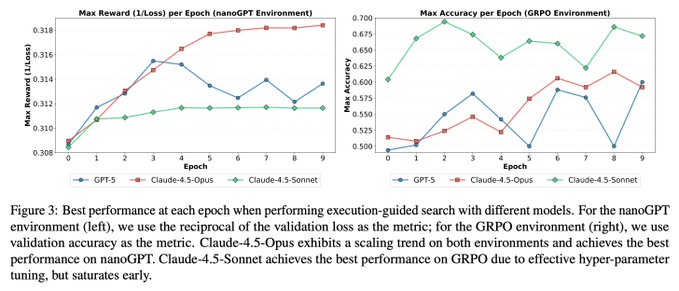

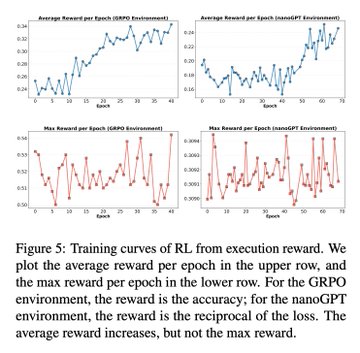

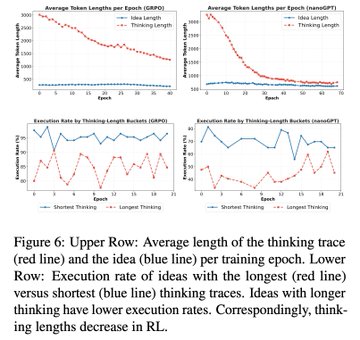

Images