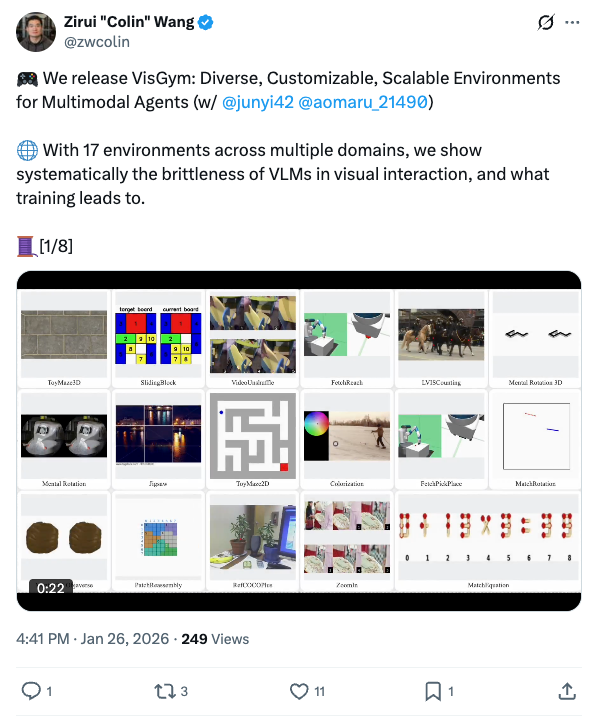

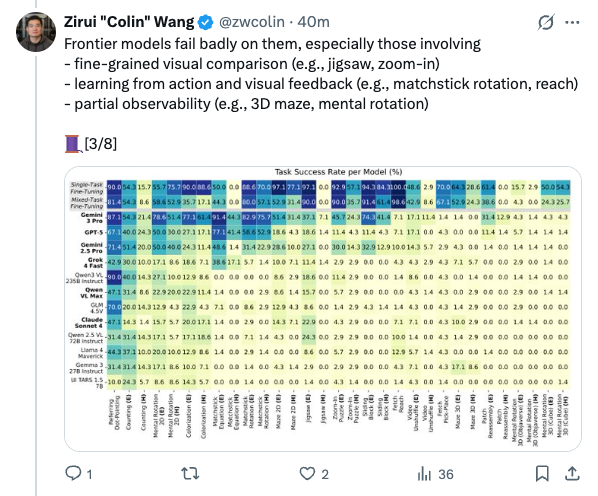

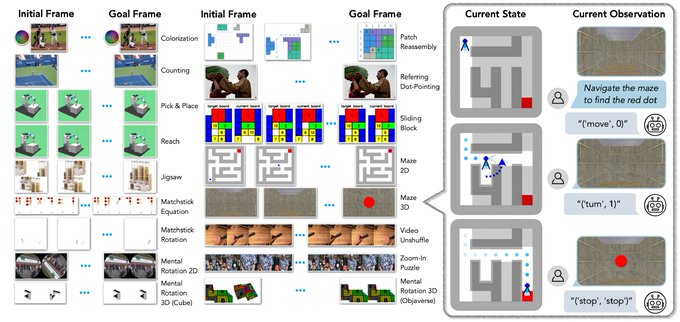

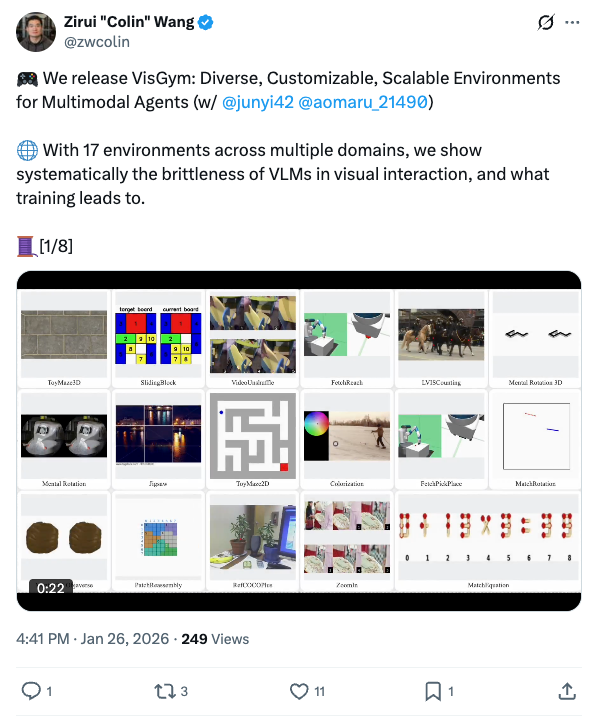

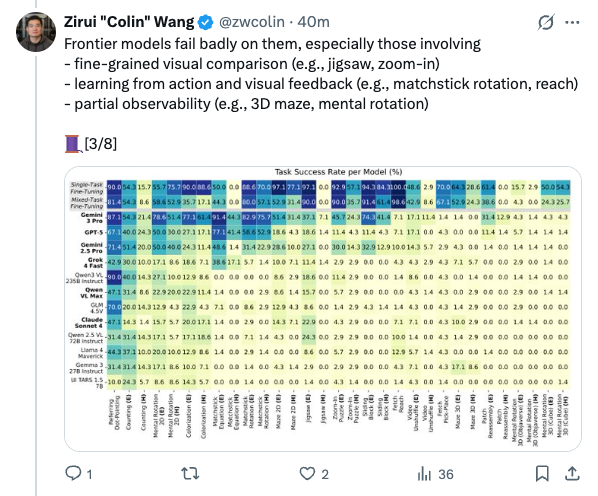

🧵 We release VisGym: Diverse, Customizable, Scalable Environments for Multimodal Agents (w/ @junyi42 @aomaru_21490). With 17 environments across multiple domains, we show systematically the brittleness of VLMs in visual interaction, and what training leads to. [1/8]

🧵 Despite rapid progress in visual reasoning with VLMs and success in domain-specific settings like computer-using agents and robotics, we are still overlooking a universal agentic capability: visual interaction. We did the heavy lifting to systematically stress-test it. [2/8]

🧵 Frontier models fail badly on them, especially those involving

- fine-grained visual comparison (e.g., jigsaw, zoom-in)
- learning from action and visual feedback (e.g., matchstick rotation, reach)
- partial observability (e.g., 3D maze, mental rotation)

[3/8]

🧵 We find that models frequently

- get stuck repeating actions
- ignore information from past interactions
- terminate episodes without reaching the goal
- ignore visual changes in the scene

[4/8]

🧵 When designing visual interaction workflows, we find that

- unbounded history can hurt performance
- text-based task representations often outperform visual ones
- adding text feedback to visual feedback helps
- providing the final goal image does not always help

[5/8]

🧵 In supervised finetuning, we find that

- generalization is task-specific, and stronger base models generalize better
- both the visual encoder and the LLM need finetuning for visual interaction
- teaching models to explore matters more than more oracle trajectories

[6/8]

🧵 We hope VisGym can serve as a foundation for measuring and improving visual interaction capabilities in vision language models.

- Website: http://visgym.github.io
- Paper: http://arxiv.org/abs/2601.16973
- Code: http://github.com/visgym/visgym

[7/8]

🧵 Grateful to our incredible collaborators and faculty advisors: @LongTonyLian @letian_fu @lisabdunlap @Ken_Goldberg @XDWang101 @istoica05 @_dmchan @sewon__min @profjoeyg. Special thanks to @OpenRouterAI and @vesslai for their support in evaluation and training. [8/8]

Thread Screenshots

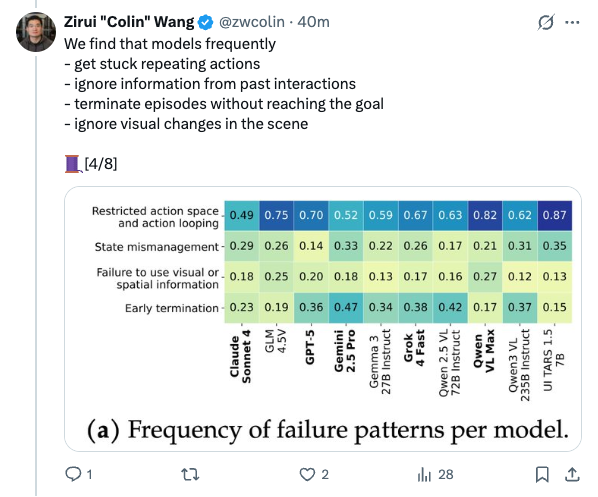

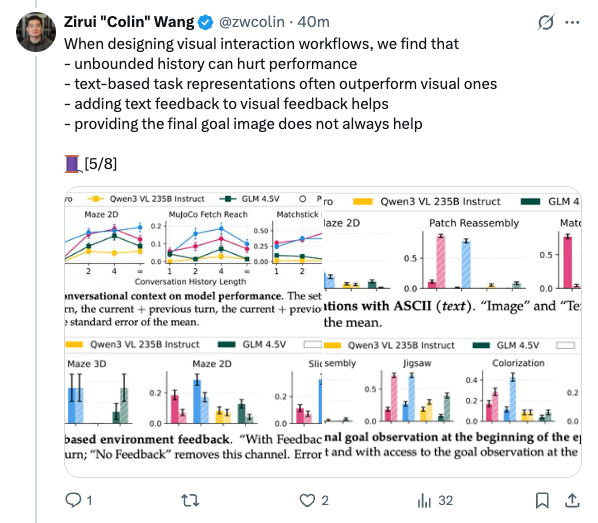

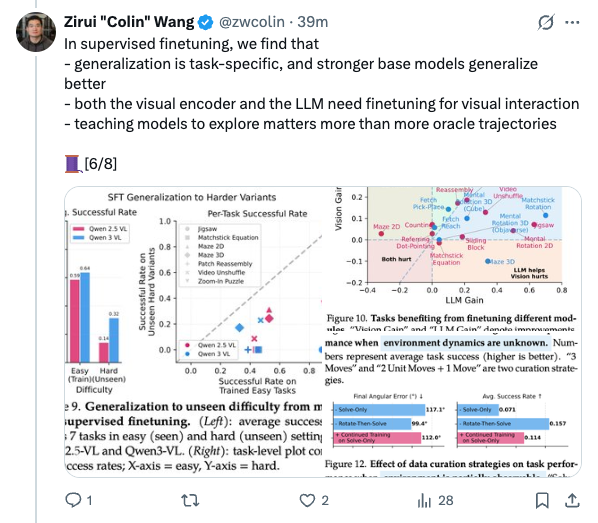

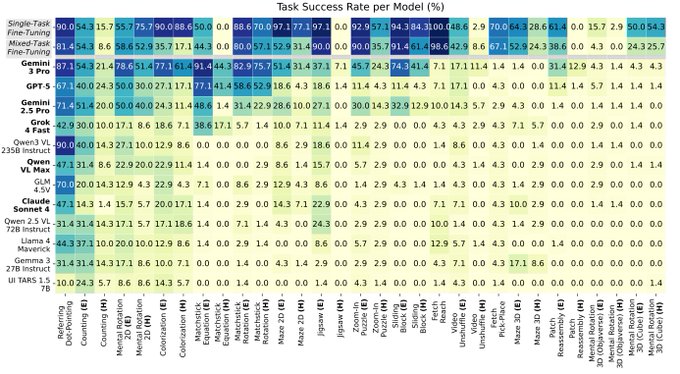

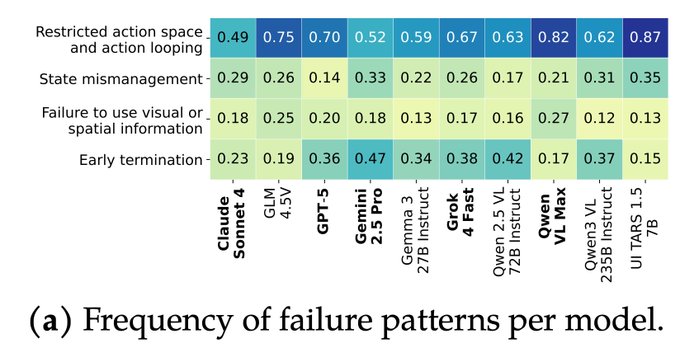

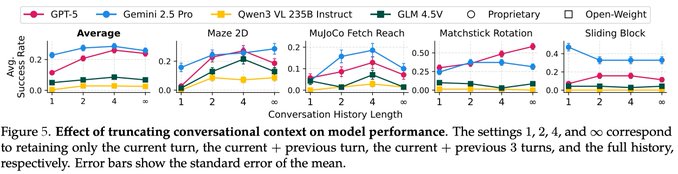

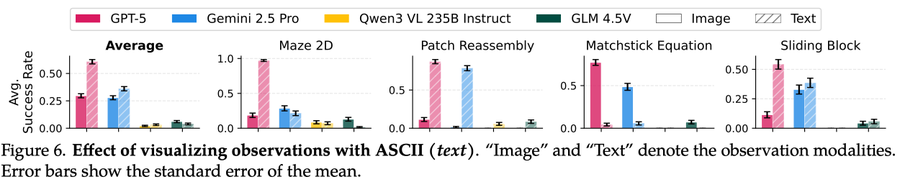

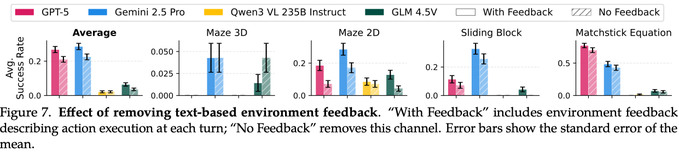

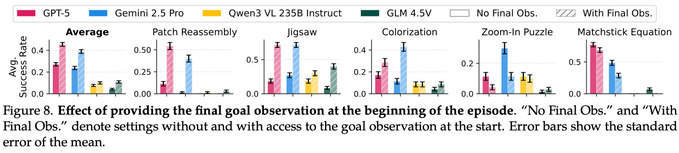

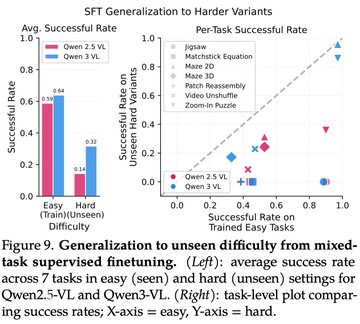

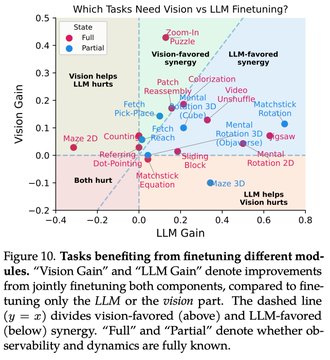

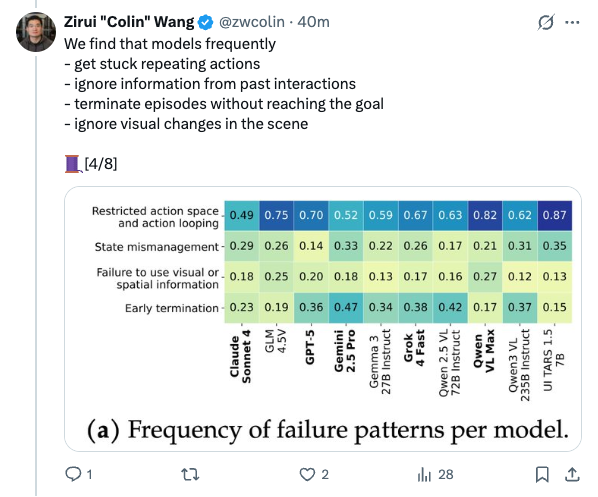

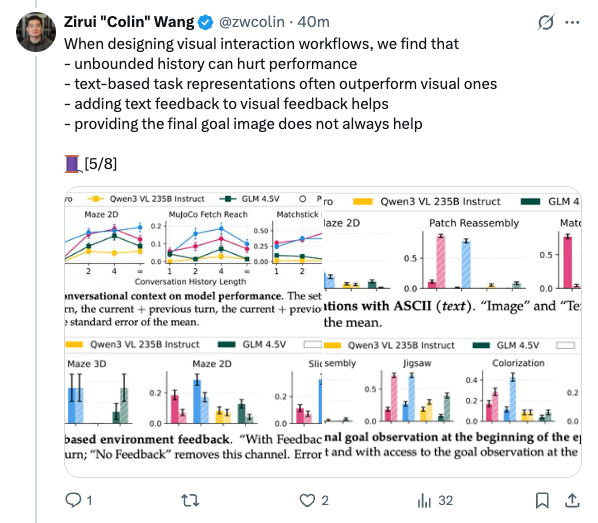

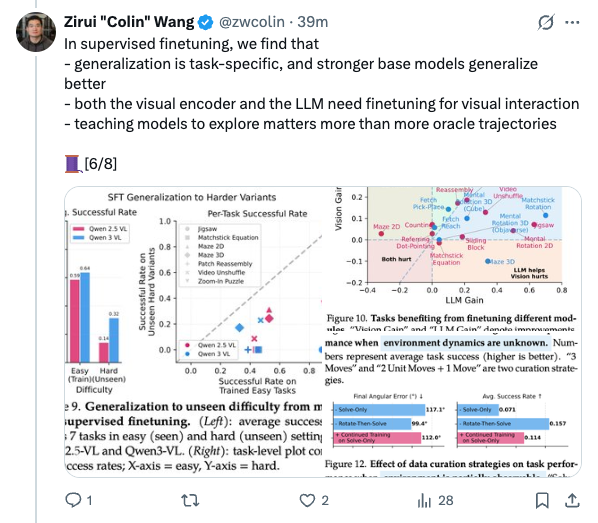

Images