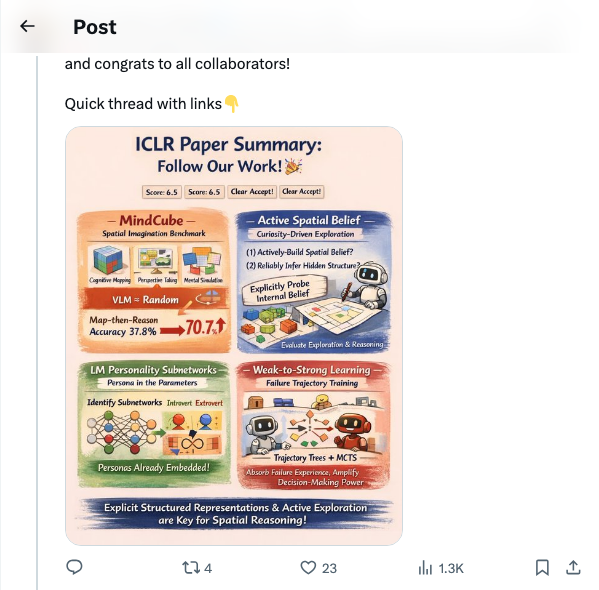

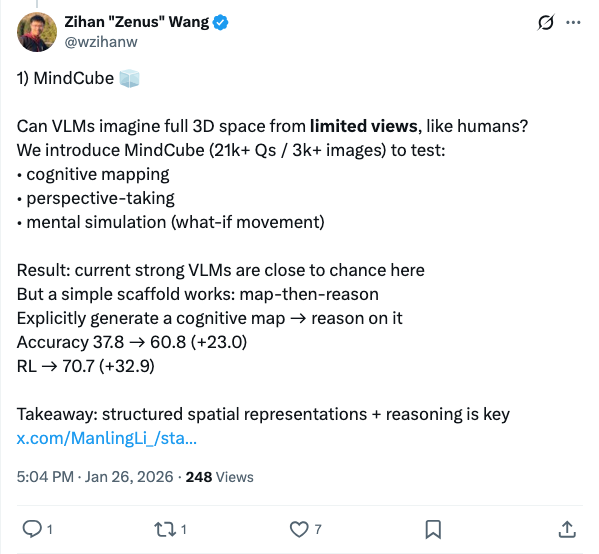

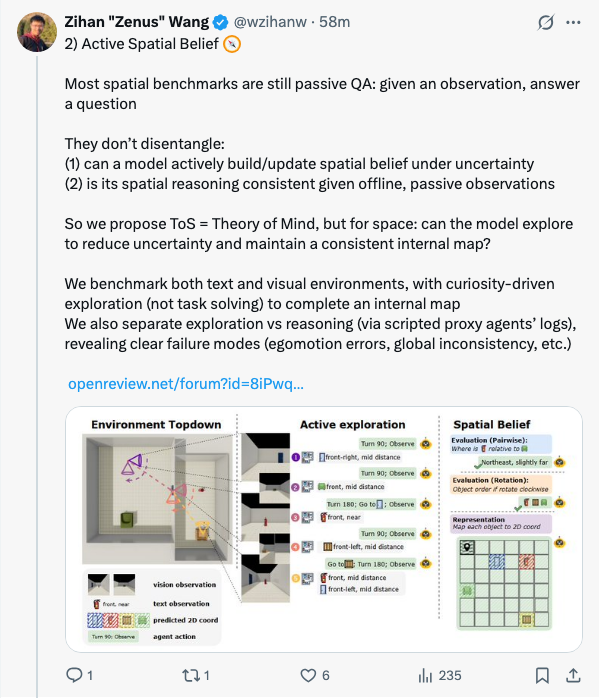

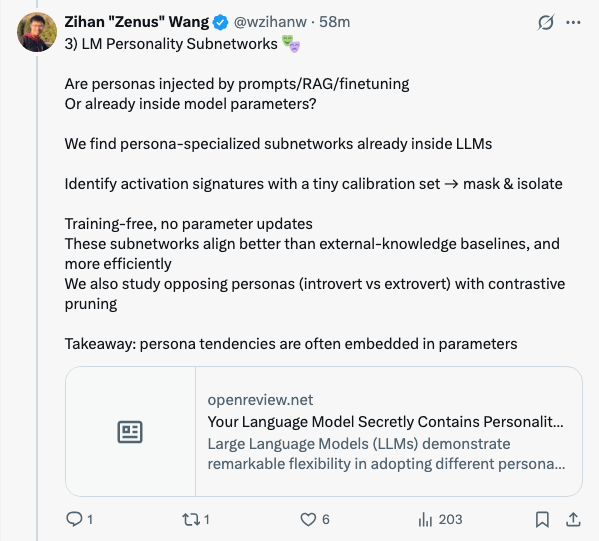

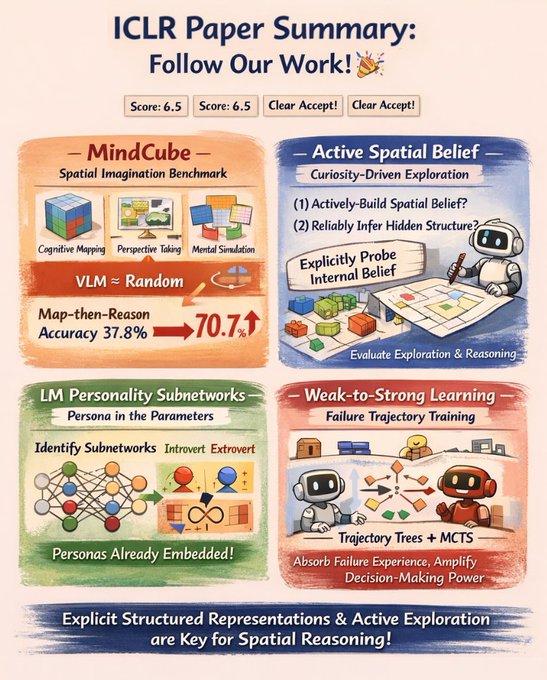

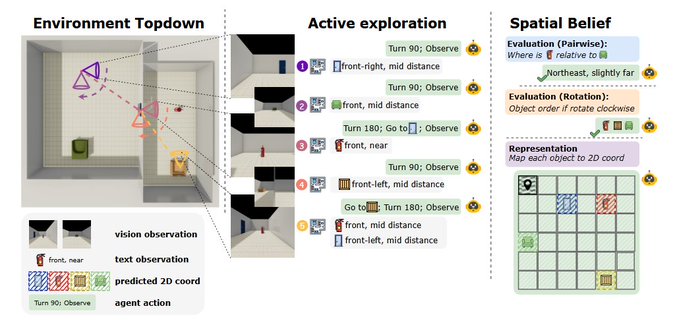

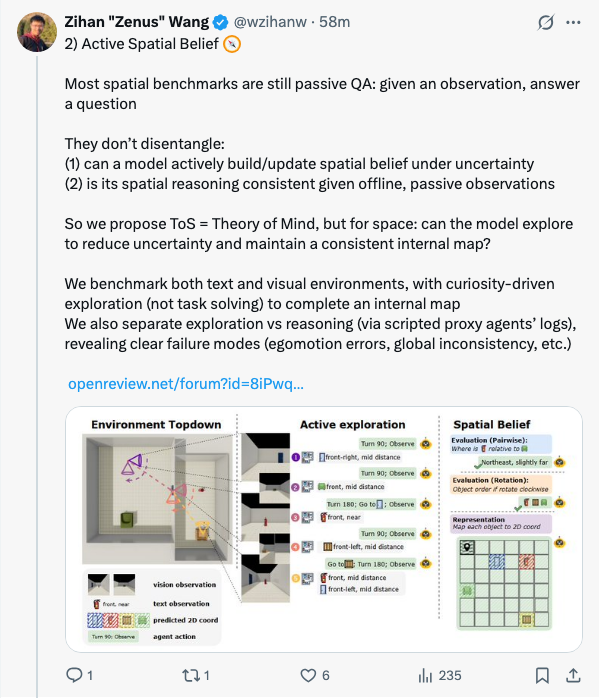

🧵 I have 4 papers accepted at ICLR! Super excited about this milestone, and congrats to all collaborators! Quick thread with links 🧵

🧵 1) MindCube

Can VLMs imagine full 3D space from limited views, like humans? We introduce MindCube (21k+ Qs / 3k+ images) to test:

• cognitive mapping
• perspective-taking
• mental simulation (what-if movement)

Result: current strong VLMs are close to chance here.

But a simple scaffold works: map-then-reason. Explicitly generate a cognitive map → reason on it.

• Accuracy: 37.8 → 60.8 (+23.0)
• With RL: → 70.7 (+32.9)

Takeaway: structured spatial representations + reasoning is key.

https://x.com/ManlingLi_/status/1939760677133987952?s=20 …

🧵 2) Active Spatial Belief

Most spatial benchmarks are still passive QA: given an observation, answer a question. They don’t disentangle:

(1) can a model actively build/update spatial belief under uncertainty
(2) is its spatial reasoning consistent given offline, passive observations

So we propose ToS = Theory of Mind, but for space: can the model explore to reduce uncertainty and maintain a consistent internal map?

We benchmark both text and visual environments, with curiosity-driven exploration (not task solving) to complete an internal map.

We also separate exploration vs reasoning (via scripted proxy agents’ logs), revealing clear failure modes (egomotion errors, global inconsistency, etc.)

https://openreview.net/forum?id=8iPwqr6Adk …

🧵 3) LM Personality Subnetworks

Are personas injected by prompts/RAG/finetuning, or already inside model parameters?

We find persona-specialized subnetworks already inside LLMs.

Identify activation signatures with a tiny calibration set → mask & isolate. Training-free, no parameter updates.

These subnetworks align better than external-knowledge baselines, and more efficiently.

We also study opposing personas (introvert vs extrovert) with contrastive pruning.

Takeaway: persona tendencies are often embedded in parameters.

🧵 4) Interactive Weak-to-Strong w/ Failure Trajectories

Weak-to-strong beyond classification → interactive decision-making.

Key idea: don’t just learn the weak model’s successes. Learn from its failures too (failure trajectories are informative).

We build trajectory trees + combine with MCTS for efficient optimization.

We also give a formal guarantee for why failure supervision helps W2S.

Big gains across domains in reasoning + decision-making, scalable setup.
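For readers who want a concrete picture of the "activation signature → mask & isolate" step in 3), here is a toy sketch. It is not the paper's method: the scoring rule (mean absolute activation per unit), the top-k selection, and all function names are assumptions made for illustration, and the "activations" are plain lists rather than real LLM hidden states.

```python
from typing import List


def activation_signature(calib_acts: List[List[float]]) -> List[float]:
    """Mean absolute activation per hidden unit over a small calibration set.

    Assumption: this scoring rule is illustrative, not taken from the paper.
    """
    n_examples = len(calib_acts)
    n_units = len(calib_acts[0])
    return [
        sum(abs(row[j]) for row in calib_acts) / n_examples
        for j in range(n_units)
    ]


def top_k_mask(signature: List[float], keep: int) -> List[int]:
    """Binary mask that keeps the `keep` units with the largest signature."""
    ranked = sorted(range(len(signature)), key=lambda j: signature[j], reverse=True)
    kept = set(ranked[:keep])
    return [1 if j in kept else 0 for j in range(len(signature))]


def apply_mask(acts: List[float], mask: List[int]) -> List[float]:
    """Zero out units outside the mask; no parameters are updated."""
    return [a * m for a, m in zip(acts, mask)]


# Hypothetical calibration activations: 3 persona prompts x 4 hidden units.
calib = [
    [0.1, 2.0, -0.2, 1.5],
    [0.0, 1.8, 0.1, -1.7],
    [-0.1, 2.2, 0.0, 1.6],
]
sig = activation_signature(calib)
mask = top_k_mask(sig, keep=2)
masked = apply_mask([0.5, 1.0, -0.3, 2.0], mask)
print(mask, masked)
```

In this toy, units 1 and 3 respond most strongly on the calibration set, so the mask keeps only those two. A contrastive variant for opposing personas could rank units by the difference between two signatures instead, again as an assumption rather than the paper's procedure.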
